AI Harassment Risks Aren’t New, But They’re Now Easier Than Ever

When we think about workplace harassment, AI might not be the first thing that comes to mind. Sure, you’ve seen the headlines about deepfakes or AI-generated images, but for many people, that still feels like something happening “out there,” not in our office, Slack channels, or group chats.

The truth is, AI hasn’t invented brand-new ways to harass, bully, or intimidate. Those risks have been around for years, enabled by Photoshop, reverse image search, or even plain old gossip. What’s changed is the speed, accessibility, and realism. Creating something convincing used to require technical skill, expensive tools, and time. Now it can take two minutes, a free app, and zero expertise.

That’s why conversations about AI in the workplace need to go beyond innovation and productivity. Even if your harassment policy already covers things like inappropriate content, impersonation, or doxxing, AI raises the stakes. It makes it easier for people who might never have considered crossing a line to do so, and it lets the harm spread faster when they do.

The Gray Area: Where “Just for Fun” Turns Into Harm

The trickiest space isn’t the obviously bad stuff; it’s the “we thought it was funny” zone.

Think about the difference between a formal email and a quick Slack message. When communication feels casual, especially in hybrid or remote work situations, it’s easier to push boundaries without realizing it. AI adds fuel to that fire by making it simple to create something lighthearted in intent but harmful in impact.

Examples:

- Face-swapping a colleague into a viral meme for an inside joke

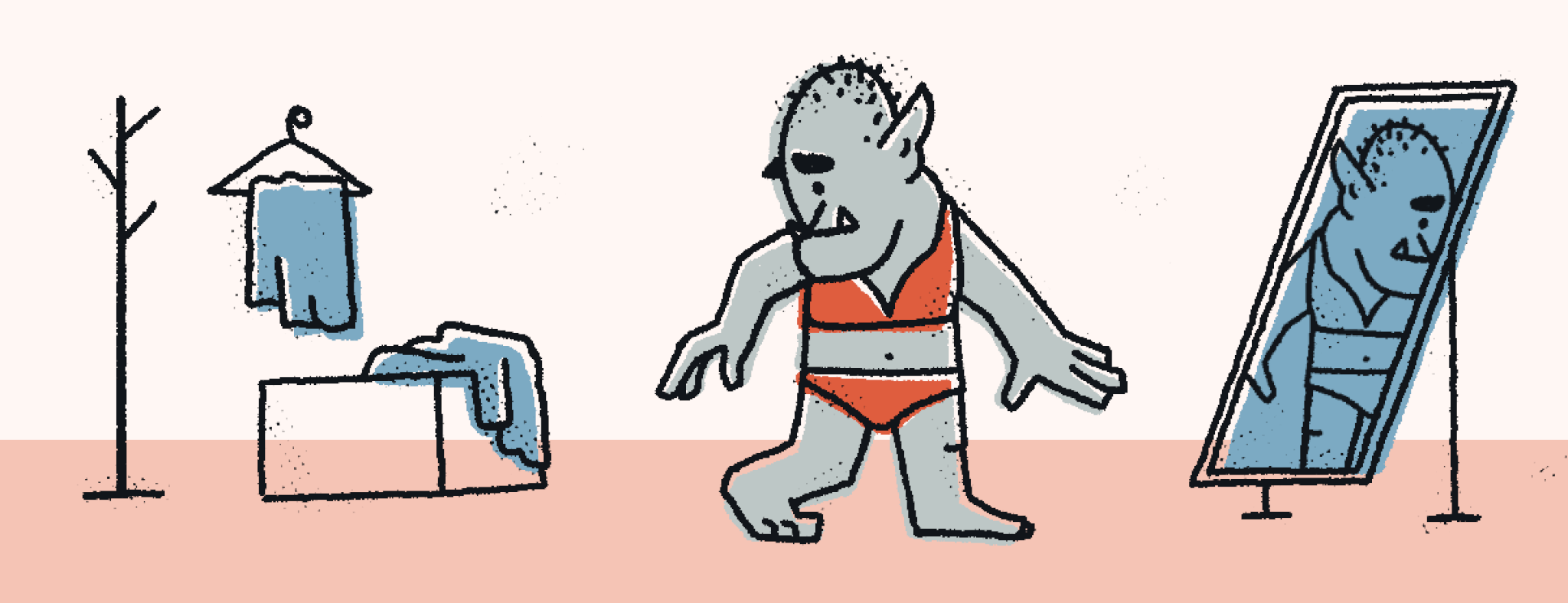

- Using an AI avatar tool to cartoon-ify a coworker and “dress” them in ways they’re not comfortable with

- Voice-cloning a teammate to deliver a fake “announcement” in chat that could land poorly

These moments usually play out in shared spaces like Slack or group chats. What feels like casual fun can still create very real legal and cultural risk. Scenarios like these also overlap with broader online harassment risks and the blurred boundaries of hybrid and remote work.

When AI Harassment Crosses Into Clear Misconduct

Then there are behaviors that almost always fall on the wrong side of the line:

- Creating sexualized deepfakes of a colleague.

- Using AI voice cloning to impersonate someone in a scam or to send harassing messages.

- Using AI to dig up and share personal information without consent.

These actions are already prohibited under most harassment, bullying, and misconduct policies. AI doesn’t make these behaviors any less wrong; it just makes them easier to pull off and harder to detect. They’re also a reminder that harassment prevention training needs to evolve alongside technology, so employees understand that digital and AI-driven misconduct is covered just as much as in-person misconduct.

For employers, it’s also important to connect AI-enabled harassment to the other forms of bullying and discrimination that can surface in digital environments.

Update Policies Now to Stay Ahead of AI Misuse

AI may not have rewritten the rulebook, but it’s a good excuse to flip back through it and check whether it covers how employees interact today and the technology at their disposal.

Consider:

- Policy updates: Call out AI as one of several tools that could be used for misconduct. We’ve included sample clauses below that you can drop directly into your existing harassment policy.

- Training scenarios: Include relatable gray-area examples, like using an AI filter to make a meme of a coworker, so people understand where “fun” ends and harm begins.

- Open dialogue and insights: Make it safe for employees to ask, “Would this be okay?” before something goes sideways. Some teams use AI policy bots or dedicated Slack channels to field these questions in real time. A policy bot can also report on what employees are actually asking, giving leaders analytics on top questions, common categories, and shifting concerns over time.

Why Add a Forward-Looking Clause?

AI is moving fast, and your policy won’t magically update itself every time a new feature launches. A forward-looking clause keeps you covered without needing constant rewrites. It also sends a clear message: the same behavior standards apply, no matter the tool.

Here are two ready-to-go clauses you can add to your policy now to protect your teams from future risk:

Use of Artificial Intelligence (AI) in Relation to Harassment and Misconduct

“Employees are prohibited from using artificial intelligence (AI) tools or technologies to create, alter, or distribute content—such as images, audio, video, text, or other media—that targets, harasses, bullies, or discriminates against any individual. This includes, but is not limited to, AI-generated depictions of colleagues or others in a false, sexual, offensive, or otherwise inappropriate context; impersonating another person using AI tools; or using AI to gather or disclose personal information without consent. All AI-related conduct is subject to the same standards and consequences as any other form of harassment or misconduct under this policy.”

Optional Forward-Looking Clause

“This policy applies to all current and future uses of AI, including technologies not yet widely available or publicly released. Any use of AI tools or systems, whether internal or external to the organization, that results in conduct inconsistent with our harassment prevention standards is prohibited.”

Why Acting Now Protects Your Culture

AI hasn’t changed what harassment is; it has just made it easier to get there.

By calling out AI in your policies, addressing the gray areas, and giving people real examples, you protect your employees, your culture, and your credibility. And the best time to set those expectations is before someone accidentally (or intentionally) crosses the line.

Bonus: we’ve got a free AI policy template you can adapt and roll out to your organization while you work on updating your harassment policy (and maybe your training, too).