AI is influencing how organizations handle some of the most legally sensitive information they hold: privileged communications.

Under pressure to leverage AI for efficiency, legal and HR teams are using it to draft investigation summaries, write policies, and even prepare legal analysis. But many of these tools aren’t built for privilege protection. In fact, many are structured in ways that could lead users to inadvertently waive privilege, leak confidential information, or create new compliance gaps.

Right now, the risk is highest wherever AI intersects with sensitive, privileged, or confidential workflows. And with courts yet to rule on this question, the choices you make now about your workflows, contracts, and training will define your organization’s exposure.

5 Moves to Protect Privilege and Privacy in the AI Era

1. Choose Enterprise Tools and Read the Fine Print

Before you let any AI tool into a workflow involving sensitive or privileged data, read the contract like a lawyer (or better yet, with one). Not all enterprise tools offer the same level of protection, and some business-grade versions allow data access, sharing, or retention that can create major risk.

Keep your data under control:

- Stick to enterprise-grade AI platforms that offer commercial terms of service with explicit data protections.

- Confirm that the contract prohibits the provider from using your data to train models, and review these terms and settings regularly, since they can change.

- Validate how long data is retained, who has access to it, and whether any information can be accessed across users or customers.

- Don’t assume a "business account" is enough. There are often multiple tiers of enterprise licensing, and the right protections may only come at the top levels.

2. Know When Privilege Applies (and When It Doesn’t)

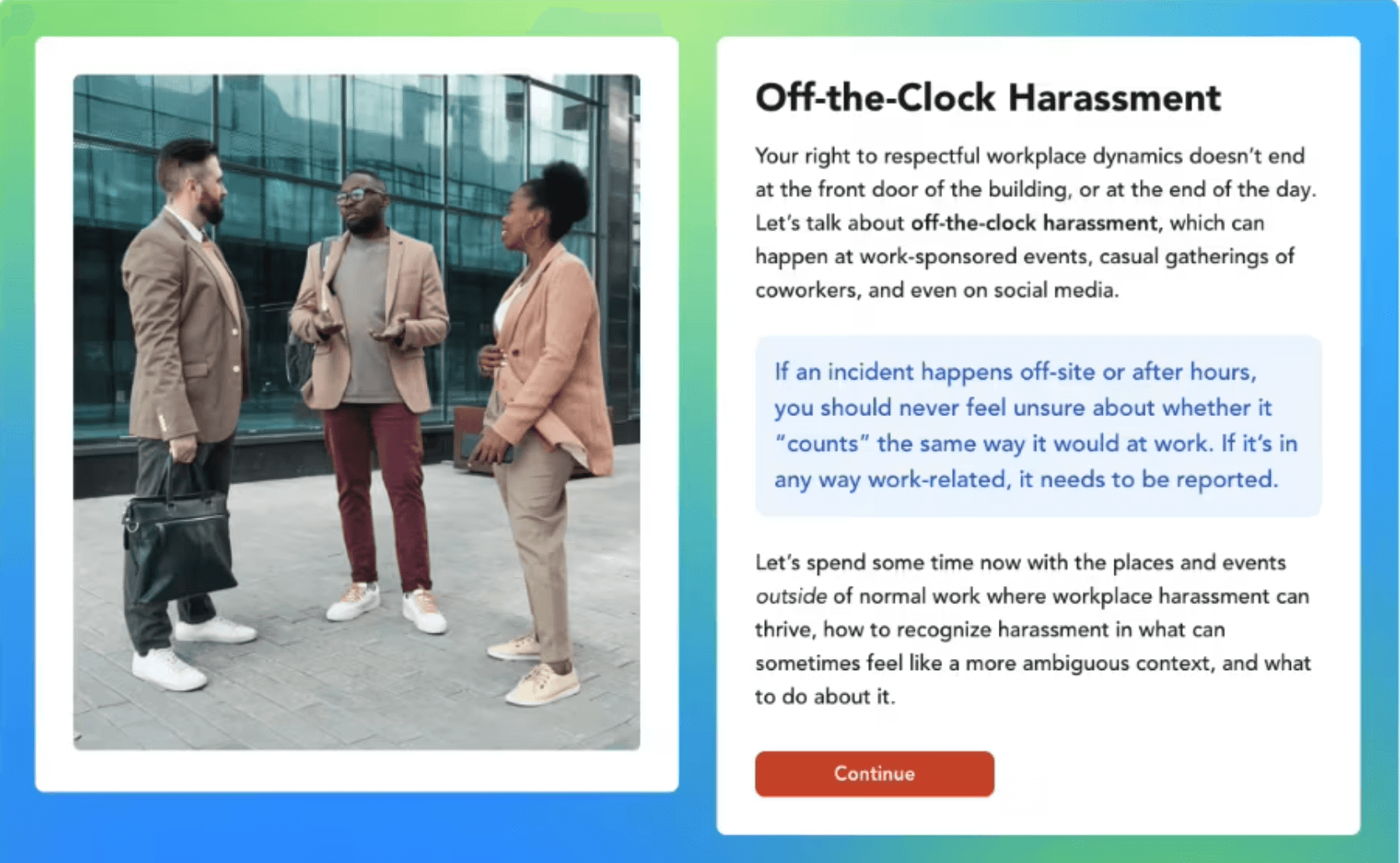

Just labeling a document "privileged" isn’t enough. Courts will examine the context: who was involved, what was discussed, and whether the communication was truly a request for legal advice. If AI is misused in these workflows, even unintentionally, a protected exchange can quickly turn into a waived one.

The basics of privilege:

- For communications to be privileged, they must be made in confidence between privileged parties (e.g., attorney and client) for the purpose of seeking or providing legal advice.

- If an AI system is inserted into that workflow, it could be treated as a third party, and disclosing a communication to a third party can waive privilege. Airtight tool settings and contract terms reduce that risk.

- Make sure employees understand that even if something feels sensitive or legal-adjacent, it may not meet the legal threshold for privilege.

Pro tip: Create a decision tree or checklist to help teams assess whether a given communication should be treated as privileged.

3. Protect Inputs and Outputs

Most guidance focuses on inputs, i.e., what not to feed into an AI tool. But outputs matter just as much. If an AI summary is based on privileged content, it can inherit that sensitivity. Failing to manage either end of the workflow can waive privilege and widen your exposure.

Tips to avoid accidentally losing privilege:

- Avoid putting investigation details, legal memos, or confidential correspondence into AI tools unless you’ve vetted the provider and locked down settings.

- Treat AI-generated outputs as extensions of the data they’re based on. If an output was built from privileged inputs, handle it as though it’s privileged too.

- Implement sharing protocols that limit both inputs and outputs to those with a clear, documented need-to-know.

- Reinforce this guidance in onboarding, templates, and internal wikis. A one-time training session won’t stick; the guidance needs to become part of how the team works.

4. Configure Internal Controls Thoughtfully

You can have the strongest AI contract on the market and still lose control if your internal systems are poorly configured. From automated meeting summaries to shared folders, the small details often create the biggest risks.

How to keep your internal environment locked down:

- Disable default sharing or auto-save settings in tools like Zoom, Teams, or Google Meet if they generate AI summaries of sensitive meetings.

- Routinely audit document permissions in shared drives, AI tools, and communications platforms to ensure only essential personnel can access privileged content.

- Create a protocol for regularly reviewing access logs and have a plan for what to do if information is exposed.

- Consider implementing "minimum necessary" access principles as a baseline for any tool that touches sensitive data.

5. Tailor Policies to Risk, Not Just Regulation

Policy templates are a start, but they won’t be enough in a fast-evolving risk environment. You need AI policies that reflect your company’s actual data, workflows, risk tolerance, and use cases.

How to begin crafting your AI policy:

- Define specific dos and don'ts for AI usage by legal, HR, and compliance teams.

- Clarify approved tools, workflows that are off-limits, and escalation paths for questions.

- Train employees on appropriate, legal, and ethical AI usage.

- Revisit your AI usage and privacy policies at least quarterly. Tech changes fast, and so will your risk landscape.

Pro tip: Our AI policy template is a great starting point for outlining generative AI best practices for your organization.

The Bottom Line

AI is rewriting how legal, HR, and compliance teams work. But it’s not rewriting the rules of privilege… yet.

Until courts offer clearer guidance, the best protection is preparation. That means:

- Choosing your tools carefully.

- Training employees thoroughly.

- Auditing your workflows regularly.

Most of all, it means resisting the temptation to default to what’s fast or free. When privilege is on the line, clarity beats convenience every time.

Disclaimer: This content is provided for informational purposes only. It is not a substitute for legal advice and should not be relied upon as a standalone resource. Please contact your legal counsel for guidance on how the content of this article may apply to your organization.